Arcadia Whole School AI Policy 2025

1. Introduction

Purpose of the Policy:

The purpose of this policy is to establish comprehensive guidelines for the ethical use of Artificial Intelligence (AI) within Arcadia British School. This policy aims to ensure that AI is used responsibly to enhance learning and teaching while maintaining academic integrity and preventing misuse. The guidelines presented here are informed by the IB Academic Integrity Guidelines, Niti National Strategy for AI, UNESCO AI Policy Makers Guidance, and the Cambridge Handbook 2023.

Scope and Application:

This policy applies to all students, teachers, and staff within Arcadia British School. It encompasses the use of AI in all academic work, including coursework, research, and assessments. The policy outlines the responsibilities of students, teachers, and school administration in the ethical use of AI, procedures for handling malpractice, and the necessary training and capacity building to support effective and responsible AI integration in our school.

2. Principles and Objectives

Ethical Use of AI:

The use of AI within Arcadia British School must align with the highest ethical standards and respect human rights. As guided by the UNESCO AI Policy Makers Guidance, we are committed to ensuring that AI technologies are implemented in ways that are fair, transparent, and respect the privacy and autonomy of all individuals. This includes:

- Ensuring AI applications do not perpetuate biases or discrimination.

- Protecting the privacy of students and staff by adhering to data protection regulations.

- Making transparent the use of AI tools in educational processes and decisions.

Promoting Academic Integrity:

Maintaining academic integrity is paramount in our use of AI technologies. In line with the IB Academic Integrity Guidelines and the Cambridge Handbook 2023, we aim to prevent plagiarism and ensure that all student work submitted for assessment is authentic and verifiable. To achieve this, we will:

- Require students to clearly acknowledge and reference any use of AI tools in their work.

- Equip teachers with the tools and knowledge to detect and address AI-generated content that has not been appropriately cited.

- Implement robust processes for the authentication of student work, ensuring that it reflects the individual’s true understanding and capabilities.

Enhancing Educational Outcomes:

AI technologies offer significant potential to enhance educational outcomes by supporting learning and improving the quality of education. Following the Niti National Strategy for AI, our objectives include:

- Leveraging AI to personalise learning experiences, enabling tailored instruction that meets the unique needs of each student.

- Using AI-driven analytics to identify learning gaps and provide timely interventions to support student success.

- Enhancing teaching methods through AI-powered tools that assist in curriculum development, assessment, and feedback processes.

- Promote Ethical AI Use:

- Develop and disseminate clear guidelines on the ethical use of AI for students and staff.

- Conduct regular training sessions to ensure all stakeholders are aware of ethical considerations in AI use.

- Support Academic Integrity:

- Incorporate AI literacy into the curriculum to help students understand the capabilities and limitations of AI.

- Use AI and other technologies to detect and prevent plagiarism, ensuring all academic work is genuine and original.

- Enhance Learning and Teaching:

- Implement AI tools that aid in personalised learning plans, offering support tailored to each student’s learning style and pace.

- Provide teachers with AI resources that streamline administrative tasks, allowing more time to focus on student engagement and instruction.

3. Definitions

Artificial Intelligence (AI):

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think and learn like humans. AI technologies encompass a wide range of applications, including but not limited to machine learning, natural language processing, computer vision, and robotics. These technologies can analyse data, recognise patterns, make decisions, and even perform tasks that typically require human intelligence.

Examples of AI Technologies:

- Machine Learning: Algorithms that allow computers to learn from and make predictions or decisions based on data. Examples include recommendation systems (like those used by Netflix) and predictive analytics.

- Natural Language Processing (NLP): Enables machines to understand and respond to human language. Applications include chatbots, language translation services, and voice-activated assistants like Siri or Alexa.

- Computer Vision: The ability of machines to interpret and make decisions based on visual inputs from the world. Examples include facial recognition systems and autonomous vehicles.

- Robotics: Machines that can carry out complex tasks autonomously or semi-autonomously, such as manufacturing robots and medical surgery robots.

Generative AI:

Generative AI refers to AI systems that can generate new content, such as text, images, sound, or video, based on the data they have been trained on. This type of AI is particularly relevant in the context of student work and assessments.

Specific Focus on Generative AI (Cambridge):

- Generative AI in Coursework: According to the Cambridge Handbook 2023, the use of generative AI for creating content must be carefully monitored and properly acknowledged. Examples of generative AI include text generation tools like OpenAI’s GPT-3, image creation tools like DALL-E, and music composition tools like Amper Music.

- Acceptable Uses: Students may use generative AI to support initial research, generate ideas, and include brief quotations of AI-generated content, provided these uses are clearly acknowledged and critically evaluated in their work.

- Unacceptable Uses: Submitting AI-generated content as one’s own without proper acknowledgement is considered academic malpractice. Extensive use of AI-generated content without critical engagement or citation is also prohibited.

Plagiarism and Academic Malpractice:

Plagiarism and academic malpractice are serious offences that undermine the integrity of educational assessments.

Definitions and Examples (IB):

- Plagiarism: Plagiarism is the act of presenting someone else’s work or ideas as one’s own without proper acknowledgment. This includes copying text, ideas, images, or data from any source, including AI-generated content, without giving appropriate credit.

- Examples of Plagiarism:

- Submitting a piece of work copied from a book, website, or another student without citation.

- Using AI tools to generate essays or assignments and presenting them as original work.

- Failing to use quotation marks for direct quotes or not providing a bibliography for referenced works.

- Academic Malpractice: Academic malpractice extends beyond plagiarism to include any behaviour that gives a student an unfair advantage or compromises the integrity of the assessment process.

- Examples of Academic Malpractice:

- Cheating during exams, such as using unauthorised materials or receiving help from others.

- Fabricating data or citations in academic work.

- Collaborating with others on assignments meant to be completed individually.

Preventing Plagiarism and Malpractice:

To uphold academic integrity, it is crucial to educate students and staff about the definitions and consequences of plagiarism and academic malpractice. The IB Academic Integrity Guidelines and Cambridge Handbook 2023 provide comprehensive frameworks for identifying, preventing, and addressing these issues. Teachers must supervise students closely, use plagiarism detection tools, and ensure all work submitted is authenticated as the student’s own. By clearly defining these terms and providing concrete examples, we aim to foster a culture of honesty and integrity within our educational community, ensuring that all assessments are fair, transparent, and reflective of each student’s true abilities.

4. Guidelines for AI Use in Academic Work

Acceptable Use of AI:

AI technologies can be valuable tools for enhancing the educational experience when used responsibly and ethically. The following guidelines, informed by the Cambridge Handbook 2023, outline acceptable uses of AI in academic work:

- Initial Research:

- AI can be utilised to gather preliminary information and support the initial stages of research. This includes using AI tools to identify relevant sources, summarise information, and generate ideas.

- Proper Acknowledgment:

- Any use of AI for research purposes must be clearly acknowledged in the student’s work. Students should document the specific AI tool used, including its name, version, and the date it was accessed.

- Students must cite AI-generated content as they would any other source, ensuring transparency and academic integrity.

- Brief Quotations:

- AI-generated content may be incorporated into academic work as brief quotations to support arguments or provide examples. However, it is essential that these quotations are critically analysed and properly cited.

- Critical Analysis and Citation:

- Quotations from AI-generated content should be limited to a few sentences (e.g., two or three sentences or about 50 words).

- Students must engage in a critical discussion of the AI-generated material, contextualising and evaluating it within their own analysis.

- Clear attribution must be provided, including the AI tool used and the prompt that generated the content.

- Supporting Learning Processes:

- AI tools can enhance learning by providing personalised educational experiences, aiding in problem-solving, and offering additional resources. However, the primary work must remain the student’s own.

- Maintaining Originality:

- AI can assist in the learning process by offering explanations, generating practice problems, or providing feedback, but students must ensure that their final submissions reflect their understanding and effort.

- Any AI-assisted learning activity must be documented, and the student’s own analysis and insights should form the core of their work.

Key Considerations for Acceptable AI Use:

- Transparency: Clearly indicate all instances where AI tools have been used.

- Critical Engagement: AI-generated content should be analysed and evaluated, not just included verbatim.

- Original Contribution: Ensure that AI aids the learning process without replacing the student’s original thought and work.

- Ethical Standards: Use AI tools ethically, respecting academic integrity and the principles outlined in the IB and Cambridge guidelines.
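
As an illustration only, the acknowledgement details named in this section (tool name, version, date accessed, and the prompt) and the quotation guideline of about 50 words could be captured in a small record. The sketch below is in Python. The class, field, and function names, the example tool name, and the output layout are assumptions made for this example; they are not prescribed by the IB or Cambridge guidance, and students should follow the citation format their teacher specifies.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AIAcknowledgement:
    """One documented use of an AI tool (all field names are illustrative)."""
    tool_name: str
    tool_version: str
    date_accessed: date
    prompt: str
    quoted_text: str = ""  # empty when the AI output was not quoted directly


# Assumed limit, taken from the "about 50 words" guidance in this section.
MAX_QUOTED_WORDS = 50


def quoted_word_count(ack: AIAcknowledgement) -> int:
    """Count whitespace-separated words in the quoted AI output."""
    return len(ack.quoted_text.split())


def within_quotation_guideline(ack: AIAcknowledgement) -> bool:
    """True when the quotation is no longer than the assumed word limit."""
    return quoted_word_count(ack) <= MAX_QUOTED_WORDS


def format_acknowledgement(ack: AIAcknowledgement) -> str:
    """Render a plain-text acknowledgement line; the layout is hypothetical."""
    return (
        f"{ack.tool_name} (version {ack.tool_version}), "
        f"accessed {ack.date_accessed.isoformat()}. "
        f'Prompt: "{ack.prompt}"'
    )


if __name__ == "__main__":
    example = AIAcknowledgement(
        tool_name="ExampleTool",  # hypothetical tool name
        tool_version="1.0",
        date_accessed=date(2025, 1, 15),
        prompt="Summarise the main causes of coastal erosion",
        quoted_text="Coastal erosion is driven mainly by wave action.",
    )
    print(format_acknowledgement(example))
    print("Within quotation guideline:", within_quotation_guideline(example))
```

A record like this keeps the acknowledgement and the quoted text together, so a teacher reviewing drafts can see at a glance which tool and prompt produced each quotation.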

5. Responsibilities

Students:

Ethical Use and Proper Citation:

- Students are expected to use AI tools ethically and responsibly in their academic work. This includes:

- Acknowledging AI Use: Clearly state any use of AI tools in their research, coursework, or any academic activity. This involves documenting the specific AI tool used, the prompts given, and the outputs generated.

- Citing Sources: Properly cite AI-generated content just as they would with any other source, ensuring transparency and academic integrity as per the IB Academic Integrity Guidelines.

- Students must understand both the potential and the limitations of AI tools, recognising the appropriate and inappropriate uses of these technologies:

- Appropriate Uses: Using AI for initial research, generating ideas, or getting suggestions.

- Inappropriate Uses: Relying on AI to produce final content or submitting AI-generated work as their own without proper acknowledgment, in line with the Cambridge Handbook 2023.

Teachers:

Supervising and Authenticating Student Work:

- Teachers have a critical role in supervising the use of AI in student work and ensuring the authenticity of submissions:

- Regular Checks: Conduct regular checks of student drafts and progress to monitor the use of AI tools in coursework.

- Authentication: Ensure that the final submissions reflect the student’s own understanding and effort. This includes looking for signs of AI misuse, such as sudden changes in writing style or sophistication (Cambridge Handbook 2023).

- Teachers must provide guidance on the ethical use of AI tools and proper citation practices:

- Instruction on AI Ethics: Educate students on the ethical considerations surrounding AI use, including the importance of transparency and the potential for bias in AI-generated content.

- Citation Practices: Teach students how to properly cite AI-generated content and integrate it into their own work responsibly, as recommended by UNESCO.

School Administration:

Implementing AI Policies:

- The school administration is responsible for establishing and enforcing comprehensive AI use guidelines:

- Policy Development: Develop clear policies that outline acceptable and unacceptable uses of AI in academic work.

- Enforcement: Ensure these policies are effectively communicated to all students, teachers, and staff and are rigorously enforced to maintain academic integrity (Niti National Strategy for AI).

- The administration must provide the necessary resources and training to support AI literacy among students and staff:

- Training Programs: Offer professional development opportunities for teachers to enhance their understanding of AI tools and their ethical use.

- Student Resources: Provide students with access to resources that help them understand and responsibly use AI technologies, including workshops, seminars, and online materials (UNESCO).

Key Actions for Administration:

- Resource Allocation: Ensure that there are sufficient resources available for AI education and training.

- Continuous Improvement: Regularly review and update AI use policies to keep pace with technological advancements and emerging ethical considerations.

6. Procedures for Handling Malpractice

Identifying AI Misuse:

Signs of Inappropriate AI Use:

Teachers and staff should be vigilant for signs that may indicate the inappropriate use of AI in student work. Key indicators include:

- Inconsistencies in Writing Style: Sudden changes in vocabulary, syntax, or overall writing quality that do not match the student’s usual work.

- Unusual Patterns: Repetitive or overly sophisticated language, unexpectedly high accuracy in grammar and punctuation, or content that seems out of context.

- Content Discrepancies: Differences in the depth of analysis or logical flow that suggest parts of the work were generated by AI rather than written by the student (Cambridge Handbook 2023).

Tools and Methods for Detection:

To detect and address potential misuse of AI, a combination of technological tools and manual oversight is necessary. However, care should be taken not to base any final judgment on AI detection tools, as they are unreliable.

- Plagiarism Detection Tools: Use software like Turnitin or other AI-specific detection tools sparingly to scan student submissions for signs of AI-generated content and potential plagiarism.

- Manual Checks: Teachers should manually review student work for inconsistencies and verify the originality of the content. This includes comparing submitted work with previous drafts and known samples of the student’s writing (IB Academic Integrity Guidelines). This is a better approach than using detection software.

Reporting and Investigation:

Steps for Reporting Suspected Malpractice:

- When AI misuse is suspected, it is crucial to follow a clear and structured process for reporting and investigation:

- For Teachers:

- Initial Review: Conduct a preliminary review to confirm suspicions. Look for patterns or discrepancies that indicate AI misuse.

- Documentation: Document the specific reasons for suspicion, including examples of inconsistencies or unusual patterns.

- Report: Submit a detailed report to the designated school authority, including all supporting evidence and documentation (Cambridge Handbook 2023).

- For Students:

- Self-Reporting: If a student realises they have inadvertently misused AI, they should report it to their teacher immediately.

- Peer Reporting: Students who suspect a peer of AI misuse should report their concerns confidentially to a teacher or school administrator.

Investigation Procedures and Consequences:

Once a report of suspected AI misuse is received, the following steps should be taken:

Investigation:

- Form an Investigation Committee: Include teachers, administrators, and, if necessary, external experts to ensure a fair and thorough investigation.

- Review Evidence: Examine the reported work, drafts, and any other relevant materials. Use plagiarism detection tools to support the investigation.

- Interview Involved Parties: Conduct interviews with the student(s) involved to understand their perspective and gather additional context.

- Determine Findings: Based on the investigation, determine whether malpractice has occurred.

- Apply Penalties: If malpractice is confirmed, apply appropriate penalties. These may range from requiring the student to redo the assignment to more severe academic consequences, such as disqualification from the assessment or course (IB Academic Integrity Guidelines).

- Provide Guidance: Offer support and guidance to the student to understand the implications of their actions and how to avoid such issues in the future.

Communication and Transparency:

- Inform Stakeholders: Ensure that all involved parties, including the student, parents, and relevant staff, are informed of the findings and the consequences.

- Maintain Records: Keep detailed records of all reports, investigations, and outcomes to ensure transparency and accountability.

7. Training and Capacity Building

AI Literacy Programs:

Our school is committed to establishing a strong foundation in AI literacy for both students and educators. We integrate comprehensive AI literacy programs designed to equip all members of our community with the necessary knowledge and skills to use AI responsibly and effectively.

- Curriculum Development:

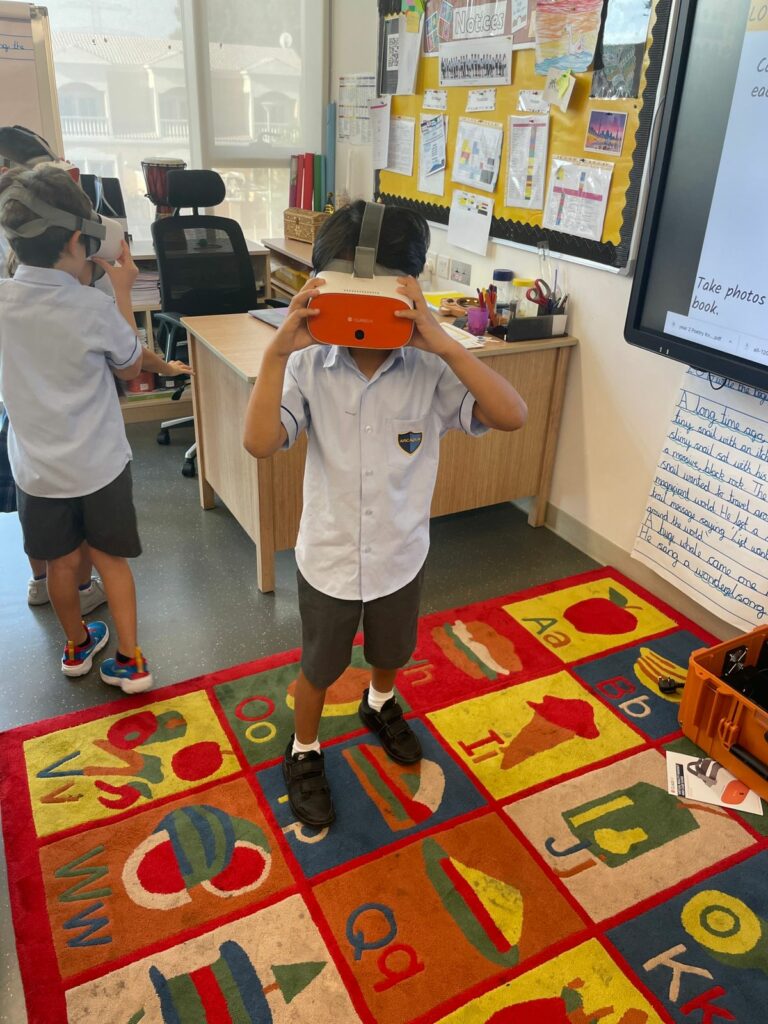

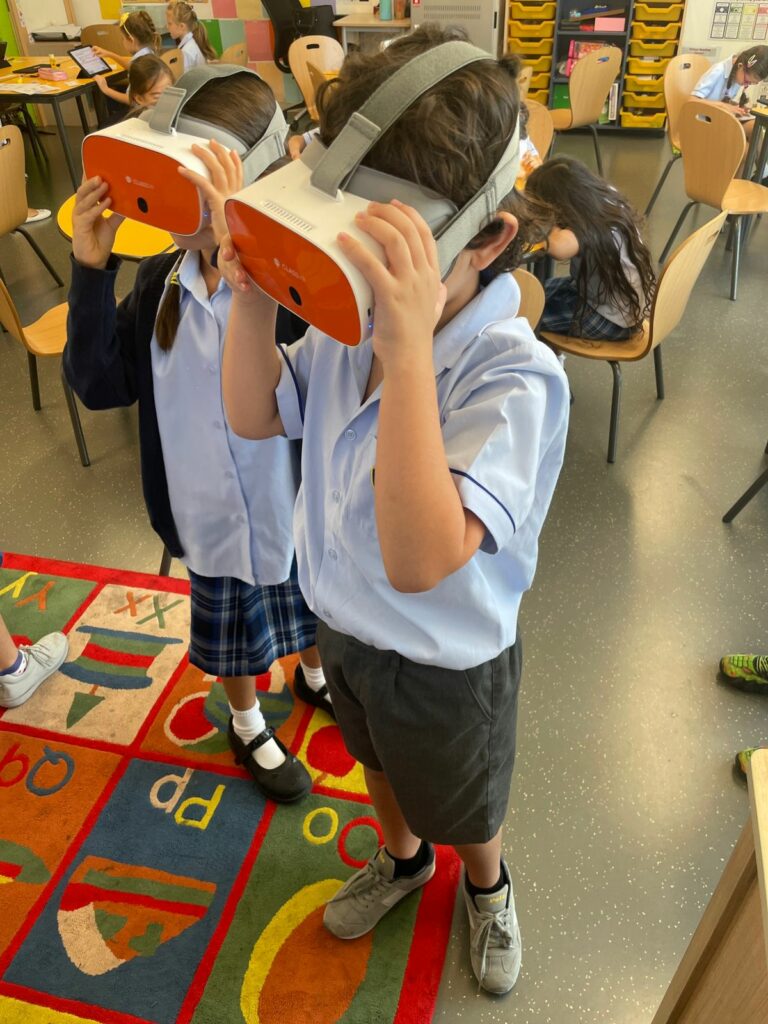

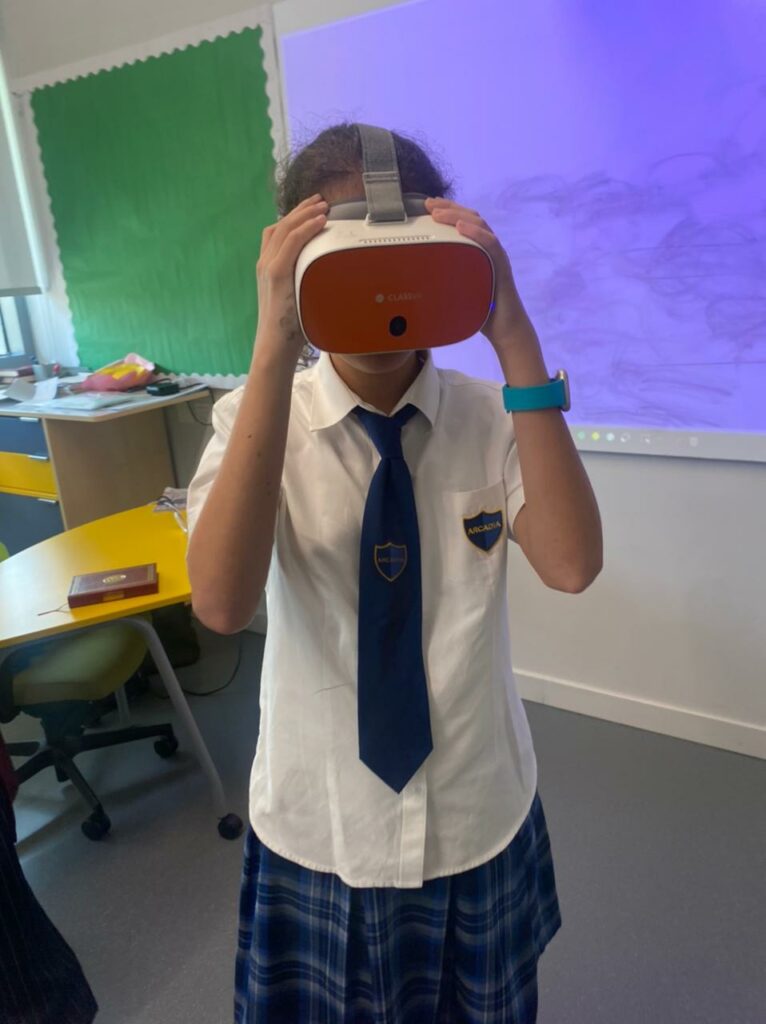

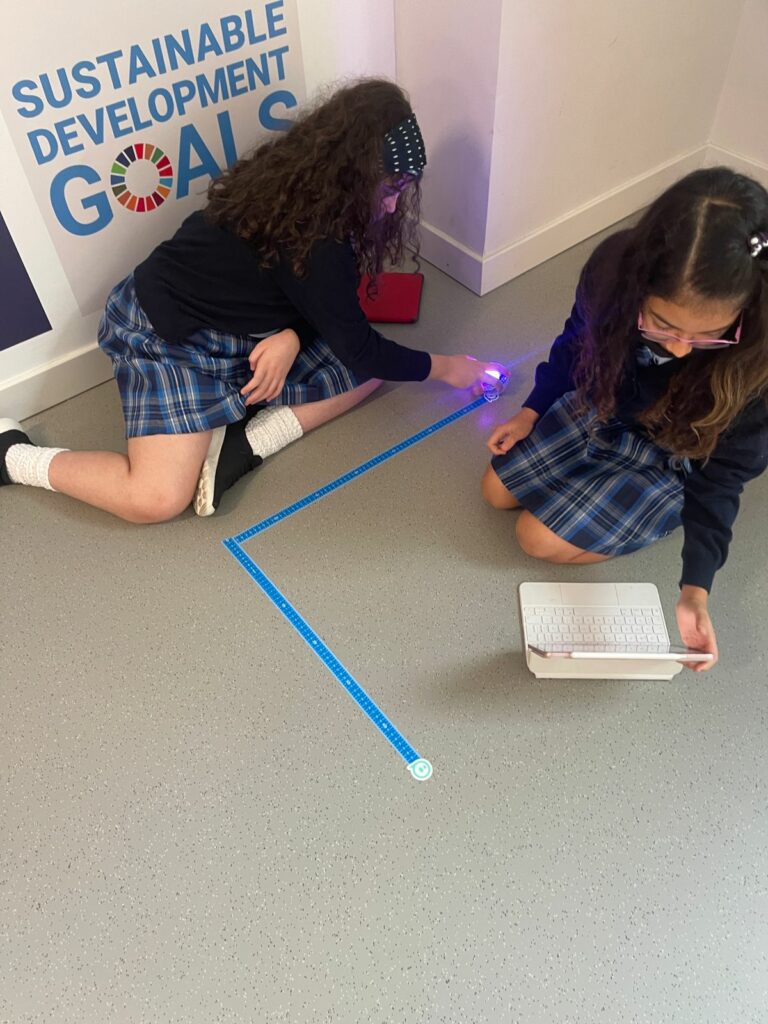

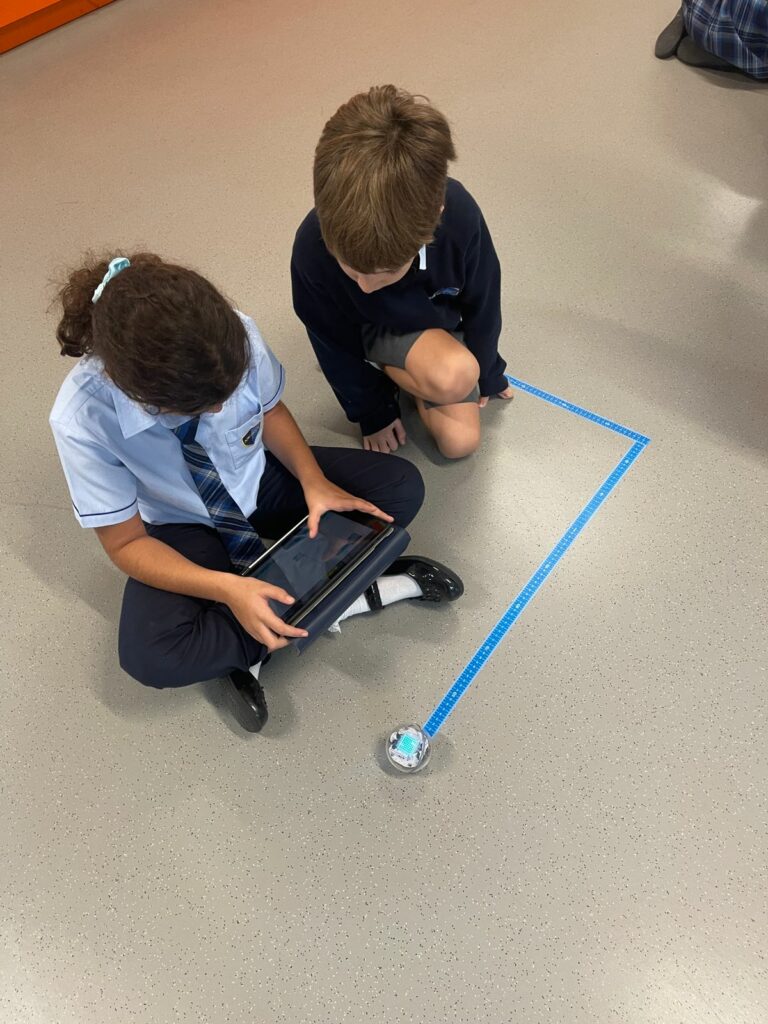

- For Students: We implement AI literacy curricula that cover the basics of AI technologies, their applications, and ethical considerations. This includes understanding how AI works, its potential benefits, and the challenges associated with its use. The curriculum is age-appropriate and integrated into existing subjects where applicable.

- For Educators: We provide educators with a thorough understanding of AI principles and technologies, enabling them to teach AI literacy effectively. This training covers how to integrate AI concepts into their teaching and how to guide students in using AI tools ethically and responsibly (Niti National Strategy for AI).

Professional Development for Teachers:

We ensure that teachers remain knowledgeable about the latest AI tools and ethical guidelines through regular and ongoing professional development.

- Training Sessions:

- We organise regular training sessions for teachers focused on the latest developments in AI technology and its applications in education.

- Our training includes workshops on ethical AI use, methods for supervising and authenticating student work, and strategies for incorporating AI into the classroom in ways that enhance learning without compromising academic integrity (UNESCO AI Policy Makers Guidance).

- Each teacher at Arcadia British School has their own EdTech pathway, which is supported by the school.

- Ethical Guidelines:

- We provide comprehensive training on the ethical implications of AI use in education. This includes understanding data privacy issues, the potential for bias in AI systems, and the importance of transparency and accountability in AI use.

- We equip teachers with the tools and knowledge to identify and address AI misuse and to foster an environment of ethical AI use among students.

Resources for Students:

We ensure that students have access to the right resources to effectively learn about and use AI technologies.

- Educational Materials:

- We develop and distribute educational materials that provide students with a clear understanding of AI technologies, their uses, and the ethical considerations involved. These materials include guides, tutorials, and case studies that illustrate both the potential and the limitations of AI (UNESCO).

- Support Materials:

- We provide students with access to AI tools and platforms for learning and experimentation, ensuring these resources come with appropriate guidelines on ethical use and proper citation practices.

- We offer additional support materials, such as online courses, webinars, and workshops, that allow students to deepen their understanding of AI and its applications in various fields.

Implementation and Monitoring:

We ensure effective implementation and continuous improvement of our AI literacy initiatives.

- Resource Allocation:

- We allocate sufficient resources for the development and delivery of AI literacy programs and professional development for teachers.

- We invest in the necessary technological infrastructure to support AI learning, including access to AI tools and platforms.

- Feedback and Continuous Improvement:

- We collect feedback from students and teachers on the effectiveness of AI literacy programs and training sessions. This feedback is used to continuously improve and update the curricula and training methods.

- We monitor the integration of AI literacy into the school curriculum and its impact on teaching and learning outcomes.

8. Monitoring and Review

Regular Policy Review:

To ensure that our AI policies remain relevant and effective, we conduct regular reviews and updates.

- We have established a schedule for periodic reviews of the AI Acceptable Use Policy. These reviews occur annually, or more frequently if there are significant advancements in AI technology or changes in educational practices.

- During each review, we assess the current policy’s effectiveness, identify areas for improvement, and make necessary updates to address new challenges and opportunities presented by evolving AI technologies (Cambridge Handbook 2023).

- Stakeholder Involvement:

- We involve a broad range of stakeholders in the review process, including students, teachers, school administrators, and AI experts. This ensures that diverse perspectives are considered and that the policy reflects the needs and experiences of the entire school community.

Monitoring AI Use and Compliance:

We consistently monitor AI use across the school to ensure adherence to the policy and maintain academic integrity.

- Tracking AI Use:

- We have implemented systems to track and monitor how AI tools are being used by students and staff. This includes logging AI tool access, usage patterns, and the context in which AI is applied in academic work.

- We regularly review these logs to identify any potential misuse or trends that may require intervention or additional guidance (Cambridge Handbook 2023).

- Compliance Checks:

- We conduct routine compliance checks to ensure that all users are following the established guidelines for AI use. These checks include reviewing student submissions for proper citation of AI-generated content and verifying that teachers are supervising and authenticating student work appropriately.

- We use plagiarism detection tools and manual checks as part of the compliance process to detect any instances of AI misuse or academic malpractice.

Feedback and Continuous Improvement:

We gather and act on feedback to continuously improve AI policies and practices.

- Feedback Collection:

- We have created mechanisms for students and teachers to provide feedback on the AI policy and its implementation. This includes surveys, focus groups, and suggestion boxes.

- We encourage open communication and make it easy for stakeholders to share their experiences and suggestions for improvement (UNESCO AI Policy Makers Guidance).

- Continuous Improvement:

- We regularly analyse the feedback collected to identify common issues, areas for improvement, and successful practices. We use this analysis to make data-driven decisions about policy adjustments and enhancements.

- We update the AI policy based on feedback and the outcomes of regular reviews. We ensure that any changes are communicated clearly to all members of the school community and that support is provided to help them adapt to new guidelines.

Key Actions for Effective Monitoring and Review:

- Review Committee: We have formed a dedicated committee responsible for overseeing the regular review and monitoring of the AI policy. This committee includes representatives from different stakeholder groups.

- Documentation and Reporting: We maintain detailed records of all reviews, monitoring activities, and feedback collected. We produce regular reports on the state of AI use and compliance within the school.

- Continuous Training: We provide ongoing training and updates for students and staff to ensure they understand and can effectively implement the latest AI policies and best practices.